Balancing Efficiency and Cost: INFINITIX AI-Stack Ushers In a New Era of Enterprise AI Infrastructure

To help enterprises make cost-effective, sustainable use of GPU computing power that keeps growing faster than Moore's Law, INFINITIX's AI-Stack solution applies advanced AI compute scheduling and optimization technology to build flexible AI infrastructure. The goal is to accelerate the AI transformation journey from model training and inference services to generative AI (GenAI) and agentic AI (Agent AI) applications.

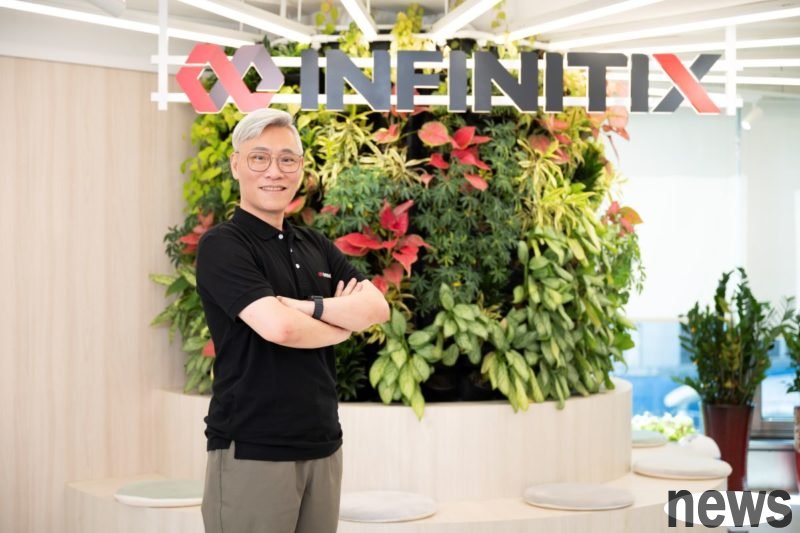

INFINITIX Executive Chairman Chen Wenyu said that the computing performance of NVIDIA AI chips has long since outpaced Moore's Law, improving 26-fold in six years. The NVIDIA H200, launched in 2024, not only signals a trend toward GPUs equipped with more and faster memory, but also marks the world's entry into an era of high-performance AI infrastructure.
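As a rough check of what "outpacing Moore's Law" means here, the 26-fold gain over six years can be converted into an annual growth rate and compared with a Moore's Law baseline. This assumes Moore's Law is read as a doubling roughly every two years, a common interpretation rather than a figure given in the article:

$$
r = 26^{1/6} \approx 1.72 \quad (\text{about } 72\% \text{ per year}), \qquad 2^{6/2} = 8 \quad (\text{Moore's Law pace over 6 years})
$$

Under that assumption, a 26-fold gain is roughly 3.25 times what a Moore's Law pace would deliver over the same period.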

The downside of these performance gains is a steadily rising purchase cost: the 2022 NVIDIA H100 is priced 13 times higher than the NVIDIA K80, a clear measure of mounting cost pressure. Chen Wenyu noted that using GPU computing power effectively has therefore become a key issue for enterprises pursuing AI applications.

Beyond compute management, comprehensive AI infrastructure management is key

AI is evolving through four stages: model training, inference services, GenAI, and agentic AI. Each stage relies on abundant GPU computing power as the backbone for developing and deploying AI applications. Because compute demand differs from stage to stage, AI infrastructure management today faces several challenges: a lack of control and prioritization, low utilization and high cost, poor visibility for decision-making, and insufficient GPU resources.

Chen Wenyu said that, given these challenges, managing AI compute resources well is not enough; more comprehensive AI infrastructure management is the top priority. Enterprises must support hybrid workloads, flexible compute configuration, and AI chips across platforms. INFINITIX's AI-Stack addresses all three and helps enterprises build innovative AI infrastructure.

Beyond AI training tasks, AI-Stack also supports high-performance computing (HPC) workloads. The platform combines containerization, HPC, and distributed computing and applies them to deep learning, getting the most out of both compute performance and cost.

For compute configuration, AI-Stack supports various combinations of computing resources to meet different needs, and can incorporate older GPUs into its GPU resource pools. For cross-platform integration, beyond supporting the full range of NVIDIA and AMD GPUs, INFINITIX works with Taiwanese AI accelerator/NPU makers such as Neuchips, and even with Phison's aiDAPTIV+ solution, to build a collaborative ecosystem.

Single-GPU slicing and multi-GPU aggregation showcase powerful GPU scheduling capabilities

The AI-Stack platform is built on three core technologies: single-GPU slicing, multi-GPU aggregation, and cross-node computing. Chen Wenyu pointed out that apart from Run:ai, which NVIDIA acquired, very few vendors worldwide offer single-GPU slicing. For enterprises with limited GPU resources but many small-model tasks to run, this technology is close to ideal, raising resource utilization while lowering operating costs.
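The article does not describe how AI-Stack implements single-GPU slicing. As a purely illustrative sketch, the Python below models only the bookkeeping side: small jobs request a fraction of a GPU, and a first-fit-decreasing heuristic packs them onto whole physical GPUs. The names `PhysicalGPU` and `allocate_slices` are hypothetical, and real slicing also requires memory and compute isolation between jobs, which this sketch does not model.

```python
from dataclasses import dataclass, field


@dataclass
class PhysicalGPU:
    """One physical GPU whose capacity can be shared by several jobs (hypothetical model)."""
    name: str
    capacity: float = 1.0  # 1.0 = one whole GPU
    used: float = 0.0
    jobs: list = field(default_factory=list)

    def free(self) -> float:
        return self.capacity - self.used


def allocate_slices(gpus, requests):
    """Place fractional GPU requests using first-fit decreasing.

    requests maps job name -> fraction of one GPU (0 < fraction <= 1).
    Returns a dict mapping job name -> GPU name.
    Raises ValueError if a request is invalid or cannot be placed.
    """
    placement = {}
    # Place the largest slices first; this tends to leave less stranded capacity.
    for job, frac in sorted(requests.items(), key=lambda kv: kv[1], reverse=True):
        if not 0 < frac <= 1:
            raise ValueError(f"{job}: fraction must be in (0, 1], got {frac}")
        for gpu in gpus:
            # Small tolerance so floating-point rounding does not reject an exact fit.
            if gpu.free() + 1e-9 >= frac:
                gpu.used += frac
                gpu.jobs.append(job)
                placement[job] = gpu.name
                break
        else:
            raise ValueError(f"{job}: no GPU has {frac} of capacity free")
    return placement


if __name__ == "__main__":
    pool = [PhysicalGPU("gpu0"), PhysicalGPU("gpu1")]
    small_jobs = {"a": 0.5, "b": 0.25, "c": 0.25, "d": 0.5, "e": 0.5}
    print(allocate_slices(pool, small_jobs))
    for gpu in pool:
        print(gpu.name, gpu.jobs, f"{gpu.used:.2f} used")
```

In this example five small jobs share two GPUs instead of occupying five, which is the kind of utilization gain the paragraph describes. First-fit decreasing is chosen only because it is a simple, well-known packing heuristic.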

Multi-GPU aggregation can greatly improve computing performance, especially for very large models. Cross-node computing goes further: AI-Stack can split a training job across multiple nodes as needed, then use distributed training to run multiple containers that process large volumes of data in parallel, greatly reducing the burden and time of model training. This makes it a powerful tool for distributed deep learning training and HPC workloads.
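Cross-node training of this kind is commonly implemented as synchronous data parallelism: each worker computes gradients on its own shard of the data, and the gradients are combined before every update. The sketch below illustrates that general technique on a toy linear model in plain Python. It is an assumption about the kind of method involved, not AI-Stack's actual implementation, and `data_parallel_step` and the other names are hypothetical.

```python
def mse_grad(w, b, xs, ys):
    """Gradient of mean squared error for the model y ~ w * x + b on one shard."""
    n = len(xs)
    gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return gw, gb


def shard(seq, k):
    """Split seq into k interleaved shards, one per (hypothetical) worker container."""
    return [seq[i::k] for i in range(k)]


def data_parallel_step(w, b, xs, ys, workers, lr):
    """One synchronous data-parallel gradient-descent step.

    Each worker computes a local gradient on its shard; the local gradients are
    then averaged, weighted by shard size, which reproduces the full-batch
    gradient (the role an all-reduce plays on a real cluster).
    """
    x_shards, y_shards = shard(xs, workers), shard(ys, workers)
    total = len(xs)
    gw = gb = 0.0
    for sx, sy in zip(x_shards, y_shards):
        if not sx:
            continue  # more workers than samples: this worker has no data
        local_gw, local_gb = mse_grad(w, b, sx, sy)
        gw += local_gw * len(sx) / total
        gb += local_gb * len(sx) / total
    return w - lr * gw, b - lr * gb


if __name__ == "__main__":
    xs = [i / 10 for i in range(20)]
    ys = [3 * x + 1 for x in xs]  # ground truth: w = 3, b = 1
    w, b = 0.0, 0.0
    for _ in range(2000):
        w, b = data_parallel_step(w, b, xs, ys, workers=4, lr=0.1)
    print(f"w = {w:.4f}, b = {b:.4f}")
```

Weighting each local gradient by its shard size makes the combined update identical to single-machine training, so adding workers changes where the work happens, not the result.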

AI-Stack uses a layered architecture that provides a full range of services, from the physical integration layer through the control layer to the development and ecosystem layers, meeting diverse GPU management needs in one place. At the physical layer, the platform uses proprietary hardware control technology to precisely manage GPU chips/servers, storage devices, and network equipment.

At the control layer, the platform provides a single management portal and monitoring interface for centrally managing all computing resources. Administrators can define control policies and rules covering allocation, security, tenants, and billing; combined with role-based access control (RBAC), this ensures that data and resources are allocated optimally and securely.
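The control-layer ideas in this paragraph, role-based permissions combined with per-tenant quotas and usage-based billing, can be illustrated with a small hypothetical model. The roles, permission names, and `Tenant` fields below are invented for illustration and are not AI-Stack's actual policy schema.

```python
from dataclasses import dataclass

# Hypothetical role -> permission mapping; a real platform defines its own.
ROLE_PERMISSIONS = {
    "admin": {"launch_container", "set_quota", "view_billing"},
    "developer": {"launch_container"},
    "viewer": set(),
}


@dataclass
class Tenant:
    name: str
    gpu_quota: float          # maximum GPUs the tenant may hold at once
    gpu_in_use: float = 0.0
    gpu_hours: float = 0.0    # accumulated usage, used for billing


def launch_container(role: str, tenant: Tenant, gpus: float) -> bool:
    """Check RBAC first, then the tenant's quota; reserve the GPUs if both pass."""
    if "launch_container" not in ROLE_PERMISSIONS.get(role, set()):
        raise PermissionError(f"role {role!r} may not launch containers")
    if tenant.gpu_in_use + gpus > tenant.gpu_quota:
        return False  # policy rejects the request: quota would be exceeded
    tenant.gpu_in_use += gpus
    return True


def release_container(tenant: Tenant, gpus: float, hours: float) -> None:
    """Free the GPUs and record GPU-hours for billing."""
    tenant.gpu_in_use -= gpus
    tenant.gpu_hours += gpus * hours


if __name__ == "__main__":
    lab = Tenant("lab-a", gpu_quota=2.0)
    print(launch_container("developer", lab, 1.5))
    print(launch_container("developer", lab, 1.0))
    release_container(lab, 1.5, hours=4)
    print(lab)
```

Checking permissions before quotas means an unauthorized user is refused outright, while an authorized user is refused only when the tenant's shared budget is exhausted.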

At the development layer, the platform provides a Kubernetes- and Docker-based work environment that supports developers through model design, training, experimentation, and deployment. Users (including development engineers, AI scientists, end users, and third-party partners) can launch AI containers or services on a self-service basis, according to their assigned permissions and governing policies.

Chen Wenyu said that both building a high-availability production environment and handling service failover can be done at the development layer. In addition, the web-based AI-Stack console automates management and service provisioning. Even users unfamiliar with low-level hardware and technical principles can, through simple policy settings and point-and-click operation, cut AI service provisioning from weeks to minutes.

For years, INFINITIX has used AI-Stack's machine learning operations (MLOps) capabilities to accelerate the development, training, and inference of AI models, automating and simplifying lifecycle management for AI applications, from model development, fine-tuning, and training to prompt engineering and production serving. This has allowed the company to build deep AI-Stack applications across many industries.

AI-Stack is now widely used across industries, and its customers fall into two broad categories: enterprises and computing centers. Enterprise customers span semiconductors, manufacturing, finance, technology, energy, transportation, medicine, and other fields. INFINITIX also took part in the "Digital Industry Cross-Domain Software Foundation and Digital Service Upgrade Plan" launched by the Ministry of Digital Affairs and completed the construction of a GPU computing power sharing platform, which will help accelerate the deployment of new AI applications in Taiwan.

In academia, AI-Stack counts many important customers, including National Cheng Kung University (NCKU), Peking University, Political Science and Technology University, and I-Shou University. Chen Wenyu gratefully noted that NCKU was the AI-Stack platform's first customer and used it to build the management core of its large-scale AI resource platform. Because INFINITIX was part of the founding team behind the prototype of Taiwan Computing Cloud (TWCC), the "Cloud GPU Software Service" (TWGC), its interface is already familiar to universities that have applied for resources from the National Center for High-performance Computing (NCHC). This greatly helps speed up the adoption of AI resources and the launch of new systems.

Beyond the AI-OCR solution jointly launched on INFINITIX AI-Stack, Jingcheng Group's subsidiary "Neiqiuhui Intelligent Technology" has combined its own Advanced RAG technology with INFINITIX AI-Stack, the National Science and Technology Council's TAIDE model, and NVIDIA GPUs to create an integrated AI appliance.

In addition, through Japanese distribution partner Macnica, Tokyo Electron (TEL), a leading semiconductor manufacturing equipment maker, and Union Tool, a well-known maker of PCB drill bits, have also adopted AI-Stack. These customers used the platform's preset policies to automate GPU resource allocation and management, eliminating the burden of manually allocating GPU resources to containers or development environments.

Expanding the partner ecosystem to gradually open up international markets

INFINITIX has three major channel distributors in Taiwan: Zero One Technology, its earliest partner, plus Maolun and Dunxin Technology. Maolun is now a subsidiary of Macnica, the world's fifth-largest IC semiconductor distributor. At this year's GTC conference, where INFINITIX exhibited for the first time, the company showcased its AI-Stack platform at the booths of Maolun and Renbao.

Before this, INFINITIX and Renbao launched a GPU server solution preloaded with AI-Stack, offering a ready-to-use AI model inference service. Chen Wenyu added that a key goal of promoting AI-Stack with Renbao and other partners is to deliver hardware that works straight out of the box and software/models that are ready to use immediately.

INFINITIX is actively working with different channel distributors to expand its market reach and brand awareness. Its overseas markets are currently mainly Japan, South Korea, Malaysia, and Thailand, and it plans to expand into the Philippines, Indonesia, the Middle East, Eastern Europe, and the United States. Its European and American market presence is expected to be in place by the fourth quarter of this year.

The company's next major market strategy is to build out its channel system step by step, connecting partner ecosystems that include independent software vendors (ISVs), server, storage, edge, and AI application providers, to further expand its international channels, with cloud service providers (CSPs) and computing centers as the next targets.